Within the context of our data visualization project, my role was mostly to develop a framework through which the other members of the team could implement their graphical ideas. To accomplish this, I developed a Python module that contains the data retrieval and the basic structure of distributed rendering, and that provides a simple hook for other people to plug their renderers into. It also provides a hook for a parsing function, which transforms the retrieved data sets into a smaller or more refined data set before they are passed to the renderer. By abstracting away data retrieval and the distributed nature of the cluster, this framework allowed rendering classes to be implemented without concern for either. The Python module itself is available here.

The module itself is divided into two main source files and three classes, the main one being the MPIScope class in MPIScope.py. This is the user-facing class, which handles initialization and setup of the program. When given a rendering object and a dictionary mapping keys to the URLs to retrieve data from (and, optionally, a parse function and a delay time), the MPIScope object handles the communication between nodes and the synchronization of the different displays.
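To make the shape of that dictionary concrete, here is a minimal sketch of the retrieval step, assuming each URL returns a JSON document over plain HTTP. The names `fetch_json` and `fetch_all` are hypothetical and this is not MPIScope's actual code; the `fetch` parameter exists only so the step can be exercised without network access.

```python
import json
from urllib.request import urlopen


def fetch_json(url, timeout=10):
    """Download one URL and decode its body as JSON (hypothetical helper)."""
    with urlopen(url, timeout=timeout) as response:
        # json.load accepts a binary file-like object such as an HTTP response.
        return json.load(response)


def fetch_all(url_dict, fetch=fetch_json):
    """Replace each URL in url_dict with the data fetched from it.

    The result keeps the same keys as url_dict. `fetch` is injectable so the
    function can be tested with a fake instead of real HTTP requests.
    """
    return {key: fetch(url) for key, url in url_dict.items()}
```

The result keeps the same keys as the input dictionary, with each URL replaced by its decoded JSON, which is the shape the parse function receives.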

A simple example is provided with the module and is repeated here.

```python
from mpiscope import MPIScope
from mpiscope import DummyRenderer

urlList = {
    "gordon": "http://sentinel.sdsc.edu/data/jobs/gordon",
    "tscc": "http://sentinel.sdsc.edu/data/jobs/tscc",
    "trestles": "http://sentinel.sdsc.edu/data/jobs/trestles",
}


def parse(data):
    # Print the retrieved data for debugging, then pass it through unchanged.
    print(data)
    return data


mpiScope = MPIScope(DummyRenderer(), urlList, parse, delay=60)
mpiScope.run()
```

In this example a new MPIScope instance is created from a DummyRenderer, a dictionary of URLs for supercomputers at the SDSC, a very basic parse function that simply acts as the identity, and a delay of 60 seconds. In this case the parse and delay arguments are largely redundant: parse only prints the data it receives for debugging purposes, and delay already defaults to 60 seconds. After this instantiation, the program is started by calling mpiScope.run().

The parse function here requires some elaboration. The "data" it receives is simply the urlList dictionary with each URL replaced by a dictionary of keys and values corresponding to the JSON data retrieved from that URL. The parse function can then be used to filter or reduce this data set before it is given to the renderer. Finally, note that this function, along with the data retrieval itself, runs on a separate thread from the renderer. As a result, if a data set is sufficiently large and your hardware is sufficiently weak, it may help performance to move heavy data processing out of the renderer and into the parse function, where it runs on that separate thread.
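The threading arrangement can be sketched roughly as follows. This is an illustration under assumptions, not MPIScope's implementation: the worker function `retrieval_worker`, the helper `latest`, and the use of a `queue.Queue` to hand results to the render loop are all hypothetical. What it shows is that whatever work `parse` does happens on the background thread, so the render loop only ever picks up finished data sets.

```python
import queue
import threading
import time


def retrieval_worker(fetch_all_data, parse, out_queue, delay, stop_event):
    """Fetch and parse on a background thread (hypothetical sketch).

    fetch_all_data is any zero-argument callable returning the keyed data
    dictionary; parse is the user's parse hook.
    """
    while not stop_event.is_set():
        # Retrieval and parsing both run here, off the render thread.
        data = parse(fetch_all_data())
        out_queue.put(data)
        # Sleep up to `delay` seconds, waking early if asked to stop.
        stop_event.wait(delay)


def latest(out_queue, current):
    """Return the newest data set in the queue, or `current` if none arrived."""
    try:
        while True:
            current = out_queue.get_nowait()
    except queue.Empty:
        return current


if __name__ == "__main__":
    results = queue.Queue()
    stop = threading.Event()
    worker = threading.Thread(
        target=retrieval_worker,
        args=(
            lambda: {"gordon": {"jobs": [1, 2, 3]}},  # stand-in for real retrieval
            lambda data: {k: len(v["jobs"]) for k, v in data.items()},
            results,
            60,
            stop,
        ),
        daemon=True,
    )
    worker.start()
    dataset = None
    for _ in range(3):  # a few iterations of a render loop
        dataset = latest(results, dataset)
        print(dataset)
        time.sleep(0.1)
    stop.set()
```

Draining the queue in `latest` means a slow render loop skips straight to the newest data set instead of working through a backlog; whether that is the right policy depends on the visualization.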

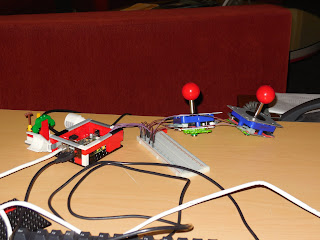

For our purposes the MPIScope class seems to fulfil its role well enough, but we haven't yet had a chance to test our work on the actual Raspberry Pi cluster we are targeting. Some of the implementation details are somewhat sketchy, but until the module is running on the target hardware I don't think it is worth attempting to optimize it. For the moment the public interface is fairly simple, but one pain point I've already noticed is the lack of a simple way to retain old data sets when new data is obtained. The current workaround is to store the older data sets in the renderer object from within its draw method, but this is far from ideal. So far, though, the problems I see with the module don't seem serious enough to justify complicating the class to fix them.
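A minimal sketch of that workaround, assuming the renderer's `draw` method receives the parsed data set as its only argument (an assumption; the exact signature MPIScope uses is not shown here). `HistoryRenderer` and `max_history` are hypothetical names. Using `collections.deque` with `maxlen` at least bounds how much memory the stored history can use.

```python
from collections import deque


class HistoryRenderer:
    """Hypothetical renderer that keeps the last few data sets it was given.

    Mirrors the workaround of storing old data inside the renderer's draw
    method; draw(data) taking a single argument is an assumed signature.
    """

    def __init__(self, max_history=10):
        # A deque with maxlen silently drops the oldest entry when full.
        self.history = deque(maxlen=max_history)

    def start(self):
        pass  # a real renderer would create its graphics context here

    def draw(self, data):
        self.history.append(data)
        # Drawing code can now compare data with earlier sets, e.g. self.history[-2].

    def flip(self):
        pass  # a real renderer would present the finished frame here
```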

The other important file in the module is the DummyRenderer file and class. While this class shouldn't be used in a real application, it does provide an example of what a renderer class passed to MPIScope should look like. The comments in the file are fairly self-explanatory, but essentially the start method should be used to create a graphics context, the draw method should do any actual drawing based on the data set, and the flip method should tell your graphics library to display the newly rendered buffer.

This project was a large learning experience for me for two major reasons. Firstly, programming a cluster of computers was entirely new to me, and what I know about it I learned while working on this module. Secondly, and perhaps most importantly, this was my first time working on a real team programming project with a clear division of labor. I'm used to having complete creative control over my projects, so learning to let others do their part without interfering took effort on my part. Another part of this challenge was having to think more deeply about the API I created than I would have if I were working alone; not being able to break things whenever I wanted meant giving more thought to my changes and how they would affect the other project members. This need for communication and cooperation made things more difficult at first, but it eventually made the project less stressful as I learned to focus on my own parts of the project rather than the project as a whole.
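Returning to the renderer interface, the start/draw/flip contract described for DummyRenderer can be written down as a `typing.Protocol`. This is a sketch under assumptions: `Renderer`, `ConsoleRenderer`, and `render_frames` are hypothetical, and the signatures (in particular `draw` taking the data set as a single argument) are guesses rather than DummyRenderer's actual code.

```python
from typing import Any, Iterable, List, Protocol


class Renderer(Protocol):
    """Assumed shape of a renderer passed to MPIScope."""

    def start(self) -> None: ...  # create the graphics context once

    def draw(self, data: Any) -> None: ...  # render one data set into a buffer

    def flip(self) -> None: ...  # tell the graphics library to show the buffer


class ConsoleRenderer:
    """Toy renderer that 'draws' text frames instead of graphics."""

    def __init__(self) -> None:
        self.frames: List[str] = []
        self._pending: str = ""

    def start(self) -> None:
        self.frames.clear()

    def draw(self, data: Any) -> None:
        # Build the next frame without displaying it yet.
        self._pending = ", ".join(f"{k}={v}" for k, v in sorted(data.items()))

    def flip(self) -> None:
        # "Display" the finished frame.
        self.frames.append(self._pending)


def render_frames(renderer: Renderer, datasets: Iterable[Any]) -> None:
    """Call start once, then draw and flip for each data set."""
    renderer.start()
    for data in datasets:
        renderer.draw(data)
        renderer.flip()
```

Because `Protocol` uses structural typing, a renderer does not need to inherit from anything; any class with matching `start`, `draw`, and `flip` methods satisfies it for a static type checker.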